Counterfeiting is commonplace on the Internet. Thanks to the use of artificial intelligence (AI) technologies, fakes are becoming cheaper, more believable, and easier for anyone to create and distribute electronically. But now there is a whole new dimension: deepfakes. They are already a major problem for our global community.

This article examines what deepfakes are and what positive and negative aspects they have. It then discusses ways to detect, protect against, and mitigate the effects of deepfakes. Finally, we will discuss how to deal with this technology – deepfakes are here to stay.

What are deepfakes?

“Deepfakes are defined as manipulated or synthesized audio or visual media that appear authentic and show people saying or doing something they never said or did, and that have been created using artificial intelligence techniques, including machine learning and deep learning.”

That is the definition the European Parliament’s Directorate-General for Parliamentary Research Services gave in 2021 in a publication intended to support members and staff of the European Parliament in their parliamentary work.

How did deepfakes get named?

There are two different explanations for the name of this technology that appear in the media.

According to some sources, deepfake technology got its name from an anonymous user of the online community Reddit who went by the name “deepfakes”. In late 2017, “deepfakes” published pornographic images and videos of famous actresses and even posted the code used to create them. The videos were viewed by hundreds of users. For the online community, the pornographer’s profile name became synonymous with a synthetic medium that uses powerful AI technology and machine learning to create fake, extremely realistic-looking image and sound content.

Other authors describe deepfakes as a recently developed application of deep learning that can be used to create fake photos and videos that humans cannot distinguish from the originals. In this reading, the term is a combination of “deep learning” and “fake”.

The technology behind deepfakes

Deepfakes are a subset of synthetic audiovisual media that manipulate or synthetically recreate images, videos, and audio tracks, usually with the help of AI and deep learning algorithms. How exactly does it work?

The foundations of deepfake technology were laid as early as 1997. In the proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques, scientists published a program called “Video Rewrite”. This program takes existing footage and creates a new video in which a person speaks words that are not in the original footage. This technique is used, for example, in dubbing movies to synchronize the lip movements of the actors with the new soundtrack. The resulting recordings are often impossible to tell apart from the original.

Until recently, however, it was very difficult to achieve high-quality manipulation of dynamic media such as video and audio tracks.

Today, as we enjoy sharing our videos and photos on social media platforms, each of us contributes to the availability of large amounts of audiovisual data on the Internet. Thus, AI can easily recognize patterns in large data sets and generate similar products.

Faking faces

Recent deepfakes are used to swap faces in a video (“face swapping”), control a person’s facial expressions in a video (“face reenactment”), or create new identities.

In face swapping, the face of one person is replaced with the face of another, while the facial expression, movement, and viewing direction of the original are preserved. Using large data sets available on the Internet, neural networks learn to extract the important information about facial expression and lighting from an image and create a new image from it.

Face reenactment manipulates a person’s head movements, facial expressions, or lip movements to make them say something they would never have said in real life. A 3D model of the target’s face is created from a video stream. The manipulator can then control this model at will with his or her own video stream and create deceptively realistic facial expressions of the target person.

The synthesis of facial images is a process for creating new people who do not exist in reality.

All that is needed as training material is a few minutes of video footage of the target person. However, the footage must be of high quality and contain as many different facial expressions and perspectives as possible, because the manipulation model can only reproduce what it has seen during training.

Faking voices

There are now a large number of easily accessible artificial intelligence applications that can be used to create voice clones. AI voice cloning algorithms make it possible to imitate a human voice, i.e., to generate synthetic speech that is strikingly similar to a human voice. Methods known as “text-to-speech” (TTS) and “voice conversion” (VC) are commonly used for this purpose.

In text-to-speech, the user types a text. The TTS system then converts the text into an audio signal. Text-to-speech technology is already used in consumer electronics that many of us use every day: Google Home, Apple’s Siri, Amazon’s Alexa – and navigation systems also use the technology.

In voice conversion, the user gives the system an audio signal, which is then converted into a manipulated audio signal. This makes it possible to put words into the mouth of a person who never spoke them: the words exist only in the manipulated recording.

However, these methods are only successful if the AI is trained with audio recordings of the target person that are of the highest and most consistent quality. And it is not just the sound of the voice that needs to be convincing. The style and vocabulary must also match the target person. Voice cloning technology is therefore closely related to text synthesis technology.

Text synthesis technology

Text synthesis technology relies on AI models that are trained on large text databases with high computing power and can then write long, coherent texts. It is based on a scientific discipline at the intersection of computer science and linguistics, whose main goal is to improve textual and verbal interaction between humans and machines.

At first glance, these texts are indistinguishable from texts written by humans. We already use this technology to write messages, generate blog posts, and even chat responses.

However, it can also be used to generate text that mimics the unique speaking style of a targeted person. This technology is based on natural language processing, or NLP for short. NLP describes methods that enable computers to analyze, interpret, and generate human language. Such a program can analyze large amounts of text, interpret language to a certain extent, and pick up emotional nuances and expressed intentions. Based on transcripts of audio clips of a particular person, the program can create a model of that person’s speaking style. This model can then be used to generate new statements in that style.
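To make the idea of a “speaking style model” more concrete, here is a minimal sketch in Python. It does not use the neural language models that real text synthesis relies on. Instead it swaps in a much simpler technique, a word-level Markov chain, which only records which word tends to follow which. The transcript, the function names, and the parameters are all invented for illustration.

```python
import random
from collections import defaultdict


def build_style_model(transcript):
    """Record, for every word, which words followed it in the transcript."""
    words = transcript.lower().split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return dict(followers)


def generate_text(model, start_word, max_words=12, seed=0):
    """Walk the model from start_word, picking a recorded follower each step."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    output = [start_word]
    # Stop at the length limit or when the last word never had a follower.
    while len(output) < max_words and output[-1] in model:
        output.append(rng.choice(model[output[-1]]))
    return " ".join(output)


# Hypothetical transcript of a target person (invented for this example).
transcript = (
    "we will win because we will work hard and we will stay together "
    "because together we are strong and we are ready"
)

model = build_style_model(transcript)
sample = generate_text(model, "we", seed=1)
print(sample)
```

Every word pair in the generated sentence was actually used by the “speaker”, yet the sentence as a whole may never have been said. Real systems capture far more than word pairs, but the principle of learning patterns from transcripts and recombining them is the same.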

According to the definition of deepfakes, all these manipulated or synthesized audio or visual media are grouped under the term deepfake technologies. They can be used for different purposes and can have both positive and negative effects.

The good side of deepfakes

Artists, educators, advertisers, and technology firms use deepfakes to make digital content more engaging and personal. Various applications are used to create or modify voices, create virtual spaces, or create entire worlds.

In our day-to-day lives, we are already using AI technologies in a variety of entertaining ways to manipulate images and videos, which we then upload to a number of social media platforms. With the right app, you can insert your own face into movie and TV clips. Other apps change our voice, our appearance, or provide beauty filters for our photos.

In addition, popular social media platforms such as Instagram, TikTok, and Snapchat already offer the ability to change faces with face filters and completely rearrange video sequences with video editing tools.

In business, for example, we use the option to change the background of the room during video conferences. There are also numerous research applications of deepfake technology in the medical, therapeutic, and scientific fields, such as facial reconstruction or giving a voice to people who cannot speak.

So are deepfakes harmless, and is all the fuss about them unjustified? No, the concern is justified.

To us, seeing often means believing. We tend to believe what we have seen with our own eyes and heard with our own ears. This makes it easy to fool the brain’s visual system with false notions.

The downside of deepfakes

Fake news, the manipulation of social media channels by trolls or social bots, and public mistrust of scientific findings are commonplace in news and media. The average person is no longer able to tell what’s fake and what’s not. Deepfakes can take the form of convincing misinformation or misleading information with the intent to cause harm.

We no longer know what is true, confuse facts with opinions, and have no trust in the news or science. For example, a 2021 empirical study showed that the mere existence of deepfakes increases distrust of any kind of information, true or false.

Rigged elections

False information is spread in the run-up to elections in order to unsettle citizens and influence their voting behavior. In 2024, there will be numerous elections around the world: For us Germans, local, state, and European elections are on the agenda. There will also be some important elections in Austria.

Vladimir Putin has already decided to get re-elected, while Bangladesh and Taiwan have just voted for new parliaments. Turkey has held its local elections, and India will elect a (new?) prime minister in late April, early May. In the United States, the election of the President will take place in November. The UK may have a new government before the end of the year, and Ukraine may also hold presidential elections.

“AI-generated content is now part of every major election, and particularly damaging in close elections,” said Dr. Oren Etzioni, an artificial intelligence researcher and professor emeritus at the University of Washington. Oren Etzioni was one of the first to warn that artificial intelligence would accelerate the spread of disinformation online. He added that the goal is often to discourage people from voting by providing false information or sowing distrust.

Pre-election manipulation

American voters have already seen the impact AI can have on elections this year. During the primary campaign, a manipulated audio message (allegedly) from President Joe Biden was sent to New Hampshire residents to discourage them from voting.

Fake video, audio, and images are easy to create with generative AI, but very difficult to detect. AI-generated content provides an opportunity for any political actor to discredit their opponents or invent political scandals. This can ultimately lead to voters making wrong decisions based on false information.

Women are particularly targeted

According to a study published in 2019, researchers found 14,678 deepfake videos online, 96% of which were used for pornographic purposes. Aside from celebrities, popular social media influencers and well-known internet personalities have also become targets. Emma Watson, Natalie Portman, and Gal Gadot are among the celebrities most frequently hit by deepfakes.

Anyone can be at risk

This is not a risk that is limited to women. Deepfakes can also creep into schools or workplaces, as anyone can be put in absurd, dangerous, or compromising situations.

There are more and more cases of deepfakes being used to impersonate someone in order to open a bank account. Fraudsters can use sophisticated algorithms to fake an ID and impersonate its holder in videos. Other concerns related to deepfakes include blackmail, identity theft, fraud against large corporations, and threats to democracy.

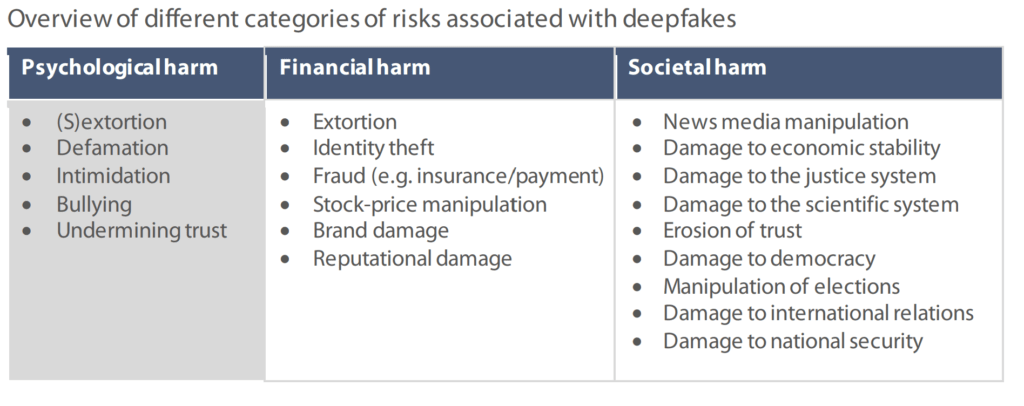

Three damage categories

Social media is increasingly used to spread fakes that look genuine. They target celebrities, politicians, and other public figures. This has consequences for the individual, for society, and even for democracy.

The risks of deepfakes for all of us can be divided into three categories: psychological harm, financial harm, and societal harm.

Source: European Parliamentary Research Service, Scientific Foresight Unit (STOA), Panel for the Future of Science and Technology, PE 690.039, July 2021.

How to spot deepfakes?

Some scientists point out that there are criteria we can use to determine if the image or video in question is a deepfake. However, the development of deepfake technologies and forensic detection methods is a cat-and-mouse game. By its very nature, the generative artificial intelligence that underpins deepfakes is constantly learning and evolving. This continuous cycle of improvement will make it increasingly difficult to detect fakes.

Here is how Dr. Etzioni puts it: “AI is developing at such a rapid pace, we’re nearing a point where people won’t be able to distinguish truth from fiction in images, video, and audio.”

So why are we still not using digital signatures?

When we want to know if a piece of information is genuine, we usually look at the source, such as a website, an email address, or even the origin of a phone call. But with most messages we receive via email, social media, or even the phone, we can’t be sure where they came from.

One thing we could have confidence in is the digital signature of the message. A digital signature can be used to prove that a document was not tampered with after it was signed. However, it is rarely used to confirm the authorship of private emails, social media posts, pictures, videos, etc.

Activating digital signatures in our email software, word processors, and smartphone cameras, and applying them to any other digital content, is really not that difficult. When we receive a message that looks particularly suspicious, we can then check who signed it. After all, we don’t accept checks that aren’t signed.

Even though such digital signatures will not prevent malicious software from writing fake messages under someone else’s name, our signatures will ensure that scammers will not be able to impersonate us and distribute content that we did not write.
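The signing and checking described in this section can be sketched in a few lines of Python. This is a toy version of textbook RSA, chosen only because it needs no extra libraries. The fixed primes, the lack of padding, and the helper names `sign` and `verify` are assumptions made for illustration. Real software uses vetted cryptographic libraries and randomly generated, much larger keys.

```python
import hashlib

# Toy key material built from two well-known Mersenne primes.
# ASSUMPTION for illustration only: real keys are random and far larger.
P = 2**127 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus, shared with everyone
E = 65537                          # public exponent, shared with everyone
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, kept secret (Python 3.8+)


def digest_as_int(message: bytes) -> int:
    """Hash the message and reduce the hash into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Only the holder of the private exponent D can produce this value."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Anyone who knows the public values N and E can run this check."""
    return pow(signature, E, N) == digest_as_int(message)


original = b"I will attend the meeting on Friday."
tampered = b"I will not attend the meeting on Friday."
signature = sign(original)

print(verify(original, signature))  # True: the message is unchanged
print(verify(tampered, signature))  # False: the content was altered after signing
```

The important property is the asymmetry: creating a valid signature requires the private key, while checking it requires only public information. That is what would let a recipient confirm both who signed a message and that it was not modified afterwards.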

Am I legally protected against deepfakes?

Fraud, defamation, extortion, intimidation, and intentional deception are prohibited under our laws. The General Data Protection Regulation sets out comprehensive guidelines to combat illegal deepfake content. The right to one’s own image is closely linked to the right to privacy as set out in the European Convention on Human Rights (ECHR). The right to the protection of one’s image is “one of the essential components of personal development and presupposes the right to control the use of that image,” the European Court of Human Rights has said. A person’s image is considered essential to their identity and therefore worthy of protection. Procedures are already in place to mitigate the damage caused by deepfakes.

However, there is no such thing as real protection against deepfakes. Laws and regulations can only help limit or mitigate the negative impact of malicious messages. Yet, since there are usually several actors involved in the life cycle of a deepfake and the perpetrator acts anonymously, the legal process is a challenge for victims. It becomes nearly impossible for the victim to identify the perpetrator.

European AI Act

Deepfakes are usually based on AI technologies. Therefore, deepfakes are subject to the same rules and regulations as artificial intelligence technologies. The European Union has passed a law on artificial intelligence that also applies to deepfake technology.

The European AI Act allows the use of deepfake technologies and imposes only a few minimum requirements. For example, the creators of deepfakes are required to label their content so that anyone can see that it is manipulated material.

However, the AI Act does not provide any measures or sanctions against manipulators who do not meet these requirements. This means that malicious individuals who anonymously create deepfakes to harm others usually go unpunished.

This is different when using detection software

Unlike the (private) use of deepfake technology to harm others, the use of deepfake detection software by law enforcement is permitted only under strict conditions, such as the use of risk management systems and appropriate data management and administration procedures. The use of deepfake detection software falls into the high-risk category because it could pose a threat to the rights and freedoms of individuals.

However, it is clear that measures that are sufficient for low-risk and benign deepfake applications, such as labeling or requiring transparency of origin, will not be sufficient to curb harmful deepfake applications.

How can we best fight deepfakes?

Based on current knowledge, it seems impossible to identify a deepfake video without detection tools. However, detection tools will only work for a limited period of time while deepfake technologies adapt.

But there is another aspect of deepfakes that we can do something about: in order to manipulate public opinion, deepfakes not only need to be produced, they also need to be spread. And that is exactly where we have a role to play. Do we still trust every report, no matter how unusual, and forward it to our friends without a second thought? Media providers and Internet platforms also play a major part in determining how much impact deepfakes have.

The media landscape has changed and disinformation will never be completely eliminated. It is up to us to become more resilient and learn how to navigate the changing media ecosystem. It’s not just about how we identify deepfakes and counterfeits. We also need to learn how to construct a more trustworthy picture of reality.


The German translation of the article is here: Deepfakes im Internet – Vorsicht vor Fälschungen!